OS: Rocky Linux

1. The storage performance tests use fio. Install it with yum:


```
[root@block1 ~]# yum -y install fio
```

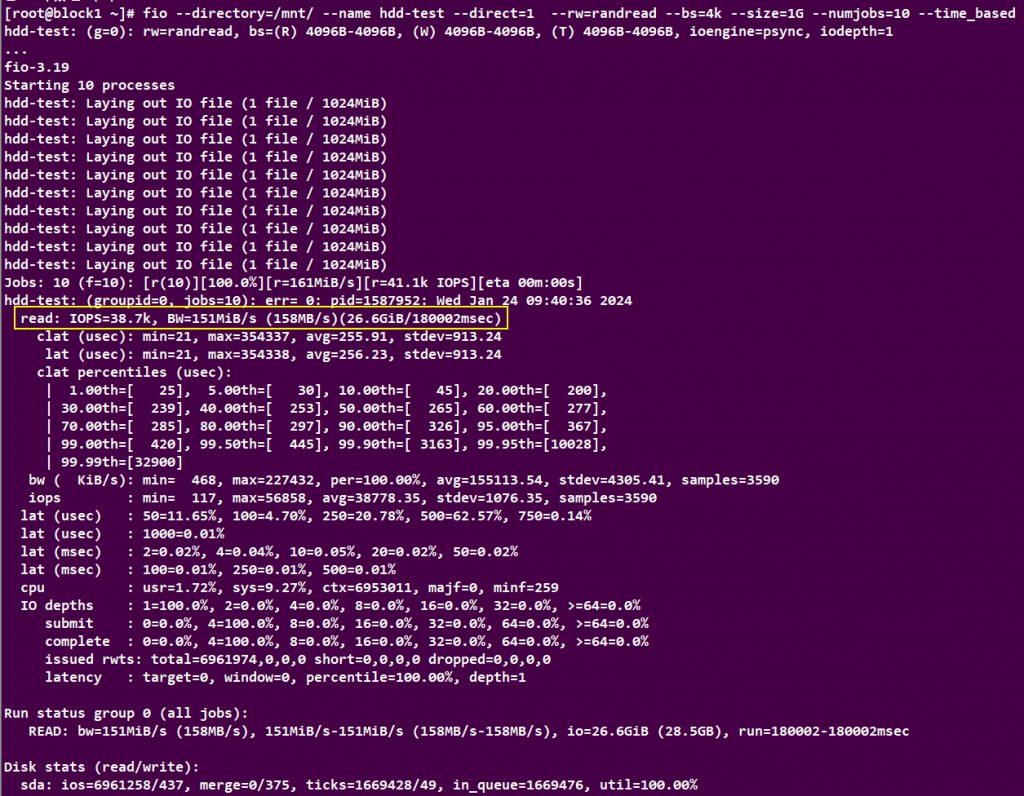

2. Random read test: 4k block size, Direct I/O, a 1G file per job, 10 jobs for 180 seconds. (The /mnt mount below is a regular SATA SSD.)


```
[root@block1 ~]# fio --directory=/mnt/ --name hdd-test --direct=1 --rw=randread \
--bs=4k --size=1G --numjobs=10 --time_based --runtime=180 --group_reporting --norandommap
hdd-test: (g=0): rw=randread, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=psync, iodepth=1
...
fio-3.19
Starting 10 processes
hdd-test: Laying out IO file (1 file / 1024MiB)
hdd-test: Laying out IO file (1 file / 1024MiB)
hdd-test: Laying out IO file (1 file / 1024MiB)
hdd-test: Laying out IO file (1 file / 1024MiB)
hdd-test: Laying out IO file (1 file / 1024MiB)
hdd-test: Laying out IO file (1 file / 1024MiB)
hdd-test: Laying out IO file (1 file / 1024MiB)
hdd-test: Laying out IO file (1 file / 1024MiB)
hdd-test: Laying out IO file (1 file / 1024MiB)
hdd-test: Laying out IO file (1 file / 1024MiB)
Jobs: 10 (f=10): [r(10)][100.0%][r=161MiB/s][r=41.1k IOPS][eta 00m:00s]
hdd-test: (groupid=0, jobs=10): err= 0: pid=1587952: Wed Jan 24 09:40:36 2024
  read: IOPS=38.7k, BW=151MiB/s (158MB/s)(26.6GiB/180002msec)
    clat (usec): min=21, max=354337, avg=255.91, stdev=913.24
     lat (usec): min=21, max=354338, avg=256.23, stdev=913.24
    clat percentiles (usec):
     | 1.00th=[ 25], 5.00th=[ 30], 10.00th=[ 45], 20.00th=[ 200],
     | 30.00th=[ 239], 40.00th=[ 253], 50.00th=[ 265], 60.00th=[ 277],
     | 70.00th=[ 285], 80.00th=[ 297], 90.00th=[ 326], 95.00th=[ 367],
     | 99.00th=[ 420], 99.50th=[ 445], 99.90th=[ 3163], 99.95th=[10028],
     | 99.99th=[32900]
   bw ( KiB/s): min= 468, max=227432, per=100.00%, avg=155113.54, stdev=4305.41, samples=3590
   iops       : min= 117, max=56858, avg=38778.35, stdev=1076.35, samples=3590
  lat (usec)  : 50=11.65%, 100=4.70%, 250=20.78%, 500=62.57%, 750=0.14%
  lat (usec)  : 1000=0.01%
  lat (msec)  : 2=0.02%, 4=0.04%, 10=0.05%, 20=0.02%, 50=0.02%
  lat (msec)  : 100=0.01%, 250=0.01%, 500=0.01%
  cpu         : usr=1.72%, sys=9.27%, ctx=6953011, majf=0, minf=259
  IO depths   : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%
     submit    : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     complete  : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     issued rwts: total=6961974,0,0,0 short=0,0,0,0 dropped=0,0,0,0
     latency   : target=0, window=0, percentile=100.00%, depth=1

Run status group 0 (all jobs):
   READ: bw=151MiB/s (158MB/s), 151MiB/s-151MiB/s (158MB/s-158MB/s), io=26.6GiB (28.5GB), run=180002-180002msec

Disk stats (read/write):
  sda: ios=6961258/437, merge=0/375, ticks=1669428/49, in_queue=1669476, util=100.00%
```

Result: read IOPS=38.7k, BW=151MiB/s (158MB/s), 26.6GiB read in 180002 msec

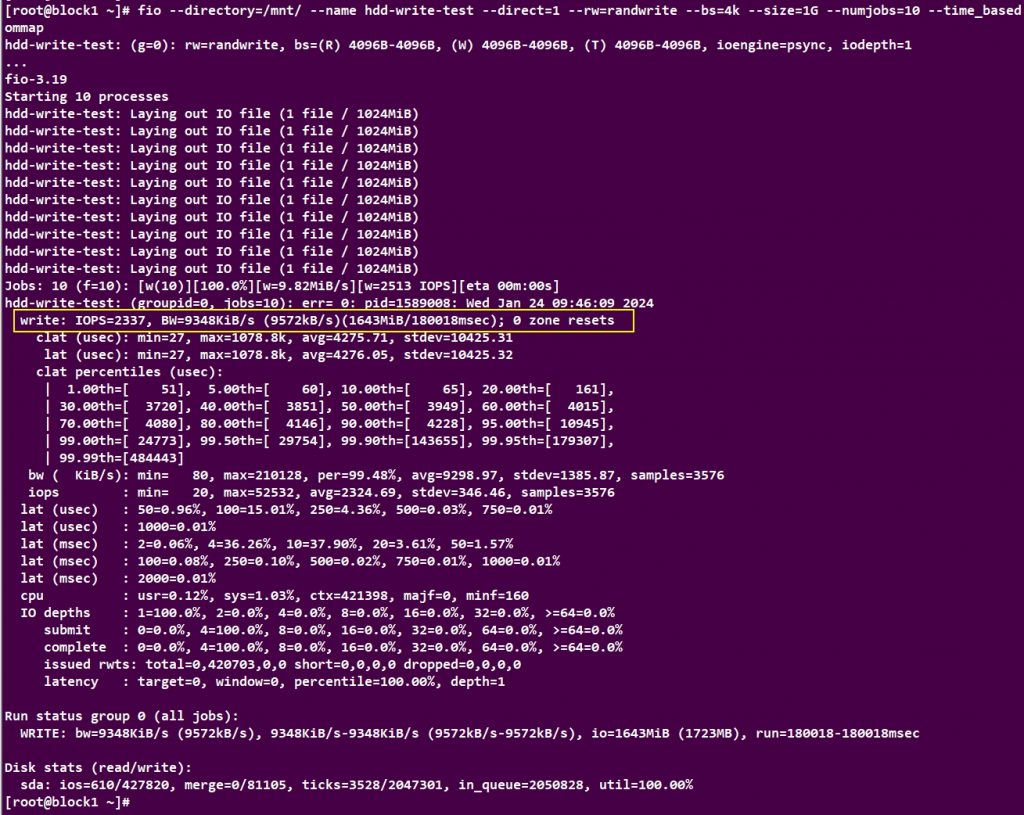
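The summary figures are related by simple arithmetic: IOPS is the number of issued I/Os divided by the runtime, and bandwidth is IOPS times the block size (every I/O is 4 KiB here). The sketch below recomputes fio's read summary from the raw counters in the output above; the helper names `iops` and `bandwidth_bytes_per_s` are illustrative only and not part of fio.

```python
# Recompute fio's randread summary from raw counters in the SATA SSD output.
BLOCK_SIZE = 4096        # --bs=4k
RUNTIME_MS = 180002      # run=180002msec
TOTAL_READS = 6961974    # issued rwts: total=6961974,0,0,0


def iops(total_ios: int, runtime_ms: int) -> float:
    """Average I/O operations per second over the whole run."""
    return total_ios / (runtime_ms / 1000)


def bandwidth_bytes_per_s(iops_value: float, block_size: int) -> float:
    """Throughput = IOPS x block size (valid because every I/O has the same size)."""
    return iops_value * block_size


read_iops = iops(TOTAL_READS, RUNTIME_MS)
bw = bandwidth_bytes_per_s(read_iops, BLOCK_SIZE)
total_bytes = TOTAL_READS * BLOCK_SIZE

# fio reports IOPS=38.7k, BW=151MiB/s (158MB/s), io=26.6GiB (28.5GB)
print(f"IOPS       ~ {read_iops / 1000:.1f}k")
print(f"bandwidth  ~ {bw / 2**20:.0f} MiB/s ({bw / 1e6:.0f} MB/s)")
print(f"total read ~ {total_bytes / 2**30:.1f} GiB ({total_bytes / 1e9:.1f} GB)")
```

Note that fio prints both binary units (MiB, GiB) and decimal units (MB, GB); the two numbers in each pair describe the same amount of data.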

3. Random write test with the same settings: 4k block size, Direct I/O, a 1G file per job, 10 jobs for 180 seconds. (The /mnt mount below is the same regular SATA SSD.)


```
[root@block1 ~]# fio --directory=/mnt/ --name hdd-write-test --direct=1 --rw=randwrite \
--bs=4k --size=1G --numjobs=10 --time_based --runtime=180 --group_reporting --norandommap
hdd-write-test: (g=0): rw=randwrite, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=psync, iodepth=1
...
fio-3.19
Starting 10 processes
hdd-write-test: Laying out IO file (1 file / 1024MiB)
hdd-write-test: Laying out IO file (1 file / 1024MiB)
hdd-write-test: Laying out IO file (1 file / 1024MiB)
hdd-write-test: Laying out IO file (1 file / 1024MiB)
hdd-write-test: Laying out IO file (1 file / 1024MiB)
hdd-write-test: Laying out IO file (1 file / 1024MiB)
hdd-write-test: Laying out IO file (1 file / 1024MiB)
hdd-write-test: Laying out IO file (1 file / 1024MiB)
hdd-write-test: Laying out IO file (1 file / 1024MiB)
hdd-write-test: Laying out IO file (1 file / 1024MiB)
Jobs: 10 (f=10): [w(10)][100.0%][w=9.82MiB/s][w=2513 IOPS][eta 00m:00s]
hdd-write-test: (groupid=0, jobs=10): err= 0: pid=1589008: Wed Jan 24 09:46:09 2024
  write: IOPS=2337, BW=9348KiB/s (9572kB/s)(1643MiB/180018msec); 0 zone resets
    clat (usec): min=27, max=1078.8k, avg=4275.71, stdev=10425.31
     lat (usec): min=27, max=1078.8k, avg=4276.05, stdev=10425.32
    clat percentiles (usec):
     | 1.00th=[ 51], 5.00th=[ 60], 10.00th=[ 65], 20.00th=[ 161],
     | 30.00th=[ 3720], 40.00th=[ 3851], 50.00th=[ 3949], 60.00th=[ 4015],
     | 70.00th=[ 4080], 80.00th=[ 4146], 90.00th=[ 4228], 95.00th=[ 10945],
     | 99.00th=[ 24773], 99.50th=[ 29754], 99.90th=[143655], 99.95th=[179307],
     | 99.99th=[484443]
   bw ( KiB/s): min= 80, max=210128, per=99.48%, avg=9298.97, stdev=1385.87, samples=3576
   iops       : min= 20, max=52532, avg=2324.69, stdev=346.46, samples=3576
  lat (usec)  : 50=0.96%, 100=15.01%, 250=4.36%, 500=0.03%, 750=0.01%
  lat (usec)  : 1000=0.01%
  lat (msec)  : 2=0.06%, 4=36.26%, 10=37.90%, 20=3.61%, 50=1.57%
  lat (msec)  : 100=0.08%, 250=0.10%, 500=0.02%, 750=0.01%, 1000=0.01%
  lat (msec)  : 2000=0.01%
  cpu         : usr=0.12%, sys=1.03%, ctx=421398, majf=0, minf=160
  IO depths   : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%
     submit    : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     complete  : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     issued rwts: total=0,420703,0,0 short=0,0,0,0 dropped=0,0,0,0
     latency   : target=0, window=0, percentile=100.00%, depth=1

Run status group 0 (all jobs):
  WRITE: bw=9348KiB/s (9572kB/s), 9348KiB/s-9348KiB/s (9572kB/s-9572kB/s), io=1643MiB (1723MB), run=180018-180018msec

Disk stats (read/write):
  sda: ios=610/427820, merge=0/81105, ticks=3528/2047301, in_queue=2050828, util=100.00%
```

Result: write IOPS=2337, BW=9348KiB/s (9572kB/s), 1643MiB written in 180018 msec
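Why is random write so much slower than random read on the same SSD? With the default psync engine and iodepth=1 (both shown in the fio header line), each of the 10 jobs has exactly one I/O in flight, so by Little's law IOPS is roughly numjobs / average latency. The sketch below plugs in the average `lat` values from the two SATA SSD outputs; `expected_iops` is a hypothetical helper, and the estimate tends to come out slightly high because it ignores time a job spends outside the measured latency.

```python
# Little's law: I/Os in flight = throughput x latency,
# so IOPS ~= (numjobs * iodepth) / average latency.
NUMJOBS = 10   # --numjobs=10
IODEPTH = 1    # ioengine=psync, iodepth=1 (from the fio header line)


def expected_iops(avg_lat_usec: float, numjobs: int = NUMJOBS, iodepth: int = IODEPTH) -> float:
    """Estimate IOPS when each job keeps `iodepth` I/Os outstanding at all times."""
    return numjobs * iodepth / (avg_lat_usec / 1_000_000)


# Average total latency ("lat (usec): ... avg=") from the SATA SSD runs above.
write_est = expected_iops(4276.05)   # fio reported IOPS=2337
read_est = expected_iops(256.23)     # fio reported IOPS=38.7k

print(f"randwrite: ~{write_est:.0f} IOPS (reported 2337)")
print(f"randread : ~{read_est:.0f} IOPS (reported 38.7k)")
```

Both estimates land within about 1% of the reported values, so on this drive the low write IOPS is explained by the roughly 4.3 ms average write latency rather than by fio itself.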

The options used are explained below.

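directory : Directory in which fio creates the test files.

name : Job name. By default fio also uses it as the prefix of the test file names.

direct=[bool] : If true, fio uses Direct I/O (O_DIRECT, bypassing the page cache); if false, it uses buffered I/O.

rw=[I/O type] : write / read / randwrite / randread / readwrite (rw) / randrw

bs : Block size of each I/O unit. Default: 4k. Separate read and write values can be given in read,write form (e.g. bs=4k,8k).

size : Total size of file I/O for each job.

numjobs=[int] : Number of clones (processes or threads) of the job that run the same workload concurrently.

time_based : Keep running for the full runtime even after size has been completely read or written, repeating the workload as needed.

runtime=[int] : Test duration in seconds.

group_reporting : Print statistics per group (all jobs combined) instead of per job.

norandommap : For random workloads, pick each new offset without tracking past I/O positions, so some blocks may be accessed more than once and others not at all.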
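To rerun these tests from a script, the options above can be assembled into an argument list. This is a minimal sketch: `build_fio_cmd` is a hypothetical helper (not part of fio), its defaults mirror the values used in this post, and it uses the `--option=value` form, which fio accepts as an equivalent of `--name hdd-test`.

```python
import shlex
from typing import List


def build_fio_cmd(directory: str, name: str, rw: str, bs: str = "4k",
                  size: str = "1G", numjobs: int = 10, runtime: int = 180,
                  direct: bool = True) -> List[str]:
    """Build the fio command line used in this post as a list for subprocess.run()."""
    return [
        "fio",
        f"--directory={directory}",
        f"--name={name}",
        f"--direct={int(direct)}",   # 1 = Direct I/O, 0 = buffered I/O
        f"--rw={rw}",                # read, write, randread, randwrite, rw, randrw
        f"--bs={bs}",                # size of each I/O unit
        f"--size={size}",            # total file I/O per job
        f"--numjobs={numjobs}",      # concurrent clones of the job
        "--time_based",              # run for the full runtime
        f"--runtime={runtime}",      # seconds
        "--group_reporting",         # one combined report for all jobs
        "--norandommap",             # random offsets without coverage tracking
    ]


read_cmd = build_fio_cmd("/mnt/", "hdd-test", "randread")
print(shlex.join(read_cmd))
```

Assuming fio is installed and on PATH, `subprocess.run(read_cmd, check=True)` would start the random read test; `build_fio_cmd("/mnt/", "hdd-write-test", "randwrite")` gives the write variant.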

For comparison, the same tests were run on an NVMe drive.

Random read: IOPS=126k, BW=494MiB/s (518MB/s)


```
[root@localhost free]# fio --directory=/mnt/ --name hdd-test --direct=1 --rw=randread \
--bs=4k --size=1G --numjobs=10 --time_based --runtime=180 --group_reporting --norandommap
hdd-test: (g=0): rw=randread, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=psync, iodepth=1
...
fio-3.19
Starting 10 processes
hdd-test: Laying out IO file (1 file / 1024MiB)
hdd-test: Laying out IO file (1 file / 1024MiB)
hdd-test: Laying out IO file (1 file / 1024MiB)
hdd-test: Laying out IO file (1 file / 1024MiB)
hdd-test: Laying out IO file (1 file / 1024MiB)
hdd-test: Laying out IO file (1 file / 1024MiB)
hdd-test: Laying out IO file (1 file / 1024MiB)
hdd-test: Laying out IO file (1 file / 1024MiB)
hdd-test: Laying out IO file (1 file / 1024MiB)
hdd-test: Laying out IO file (1 file / 1024MiB)
Jobs: 10 (f=10): [r(10)][100.0%][r=495MiB/s][r=127k IOPS][eta 00m:00s]
hdd-test: (groupid=0, jobs=10): err= 0: pid=68472: Tue Jan 23 03:19:48 2024
  read: IOPS=126k, BW=494MiB/s (518MB/s)(86.8GiB/180001msec)
    clat (usec): min=46, max=3433, avg=77.84, stdev=26.27
     lat (usec): min=46, max=3434, avg=77.93, stdev=26.27
    clat percentiles (usec):
     | 1.00th=[ 55], 5.00th=[ 55], 10.00th=[ 56], 20.00th=[ 57],
     | 30.00th=[ 59], 40.00th=[ 63], 50.00th=[ 75], 60.00th=[ 79],
     | 70.00th=[ 86], 80.00th=[ 94], 90.00th=[ 110], 95.00th=[ 128],
     | 99.00th=[ 163], 99.50th=[ 180], 99.90th=[ 231], 99.95th=[ 379],
     | 99.99th=[ 433]
   bw ( KiB/s): min=490763, max=522776, per=100.00%, avg=506711.95, stdev=642.43, samples=3580
   iops       : min=122690, max=130694, avg=126677.89, stdev=160.61, samples=3580
  lat (usec)  : 50=0.03%, 100=85.25%, 250=14.64%, 500=0.08%, 750=0.01%
  lat (usec)  : 1000=0.01%
  lat (msec)  : 2=0.01%, 4=0.01%
  cpu         : usr=3.21%, sys=9.83%, ctx=22765486, majf=0, minf=139
  IO depths   : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%
     submit    : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     complete  : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     issued rwts: total=22765473,0,0,0 short=0,0,0,0 dropped=0,0,0,0
     latency   : target=0, window=0, percentile=100.00%, depth=1

Run status group 0 (all jobs):
   READ: bw=494MiB/s (518MB/s), 494MiB/s-494MiB/s (518MB/s-518MB/s), io=86.8GiB (93.2GB), run=180001-180001msec

Disk stats (read/write):
  nvme0n1: ios=22735956/14, merge=0/90, ticks=1551568/5, in_queue=1551573, util=100.00%
```

Random write: IOPS=622k, BW=2429MiB/s (2547MB/s)


```
[root@localhost free]# fio --directory=/mnt/ --name hdd-write-test --direct=1 --rw=randwrite \
--bs=4k --size=1G --numjobs=10 --time_based --runtime=180 --group_reporting --norandommap
hdd-write-test: (g=0): rw=randwrite, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=psync, iodepth=1
...
fio-3.19
Starting 10 processes
hdd-write-test: Laying out IO file (1 file / 1024MiB)
hdd-write-test: Laying out IO file (1 file / 1024MiB)
hdd-write-test: Laying out IO file (1 file / 1024MiB)
hdd-write-test: Laying out IO file (1 file / 1024MiB)
hdd-write-test: Laying out IO file (1 file / 1024MiB)
hdd-write-test: Laying out IO file (1 file / 1024MiB)
hdd-write-test: Laying out IO file (1 file / 1024MiB)
hdd-write-test: Laying out IO file (1 file / 1024MiB)
hdd-write-test: Laying out IO file (1 file / 1024MiB)
hdd-write-test: Laying out IO file (1 file / 1024MiB)
Jobs: 10 (f=10): [w(10)][100.0%][w=2444MiB/s][w=626k IOPS][eta 00m:00s]
hdd-write-test: (groupid=0, jobs=10): err= 0: pid=68804: Tue Jan 23 03:45:40 2024
  write: IOPS=622k, BW=2429MiB/s (2547MB/s)(427GiB/180001msec); 0 zone resets
    clat (usec): min=11, max=4138, avg=15.51, stdev= 6.17
     lat (usec): min=11, max=4138, avg=15.61, stdev= 6.18
    clat percentiles (usec):
     | 1.00th=[ 13], 5.00th=[ 13], 10.00th=[ 14], 20.00th=[ 14],
     | 30.00th=[ 15], 40.00th=[ 15], 50.00th=[ 15], 60.00th=[ 16],
     | 70.00th=[ 16], 80.00th=[ 17], 90.00th=[ 18], 95.00th=[ 21],
     | 99.00th=[ 28], 99.50th=[ 29], 99.90th=[ 38], 99.95th=[ 41],
     | 99.99th=[ 318]
   bw ( MiB/s): min= 1352, max= 2637, per=100.00%, avg=2433.38, stdev=21.15, samples=3590
   iops       : min=346148, max=675132, avg=622944.34, stdev=5414.58, samples=3590
  lat (usec)  : 20=93.82%, 50=6.16%, 100=0.01%, 250=0.01%, 500=0.01%
  lat (usec)  : 750=0.01%, 1000=0.01%
  lat (msec)  : 2=0.01%, 4=0.01%, 10=0.01%
  cpu         : usr=6.80%, sys=26.14%, ctx=111942105, majf=0, minf=125
  IO depths   : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%
     submit    : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     complete  : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     issued rwts: total=0,111941743,0,0 short=0,0,0,0 dropped=0,0,0,0
     latency   : target=0, window=0, percentile=100.00%, depth=1

Run status group 0 (all jobs):
  WRITE: bw=2429MiB/s (2547MB/s), 2429MiB/s-2429MiB/s (2547MB/s-2547MB/s), io=427GiB (459GB), run=180001-180001msec

Disk stats (read/write):
  nvme0n1: ios=0/111862380, merge=0/53139, ticks=0/1182439, in_queue=1182439, util=100.00%
```

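The gap between the regular SATA SSD and the NVMe drive is large: with identical fio settings, the NVMe drive delivered about 3.3x the random read IOPS (126k vs 38.7k) and about 266x the random write IOPS (622k vs 2337).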
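The ratios above can be computed directly from fio's summary lines. Below is a small sketch that parses the `IOPS=` and `BW=` fields of the four summaries copied from this post. It assumes IOPS suffixes are decimal (k = 1000) and bandwidth units are binary (KiB/MiB/GiB), matching how they appear in the outputs above; `parse_summary` is a hypothetical helper, not a fio feature.

```python
import re
from typing import Tuple

# fio summary lines copied from the four runs in this post.
SUMMARIES = {
    ("SATA SSD", "randread"): "read: IOPS=38.7k, BW=151MiB/s (158MB/s)",
    ("SATA SSD", "randwrite"): "write: IOPS=2337, BW=9348KiB/s (9572kB/s)",
    ("NVMe", "randread"): "read: IOPS=126k, BW=494MiB/s (518MB/s)",
    ("NVMe", "randwrite"): "write: IOPS=622k, BW=2429MiB/s (2547MB/s)",
}

IOPS_SUFFIX = {"": 1, "k": 1_000, "M": 1_000_000}       # decimal multipliers
BW_UNIT = {"KiB/s": 2**10, "MiB/s": 2**20, "GiB/s": 2**30}  # binary units


def parse_summary(line: str) -> Tuple[float, float]:
    """Return (IOPS, bytes per second) from a fio 'read:'/'write:' summary line."""
    iops = re.search(r"IOPS=([\d.]+)([kM]?)", line)
    bw = re.search(r"BW=([\d.]+)(KiB/s|MiB/s|GiB/s)", line)
    if not iops or not bw:
        raise ValueError(f"not a fio summary line: {line!r}")
    return (float(iops.group(1)) * IOPS_SUFFIX[iops.group(2)],
            float(bw.group(1)) * BW_UNIT[bw.group(2)])


for workload in ("randread", "randwrite"):
    sata_iops, sata_bw = parse_summary(SUMMARIES[("SATA SSD", workload)])
    nvme_iops, nvme_bw = parse_summary(SUMMARIES[("NVMe", workload)])
    print(f"{workload}: NVMe/SATA IOPS x{nvme_iops / sata_iops:.1f}, "
          f"bandwidth x{nvme_bw / sata_bw:.1f}")
```

Because every I/O is 4 KiB in both tests, the IOPS ratio and the bandwidth ratio come out nearly identical; small differences come from fio rounding the printed values.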