OS environment: CentOS 7.x

Infrastructure: on-premises

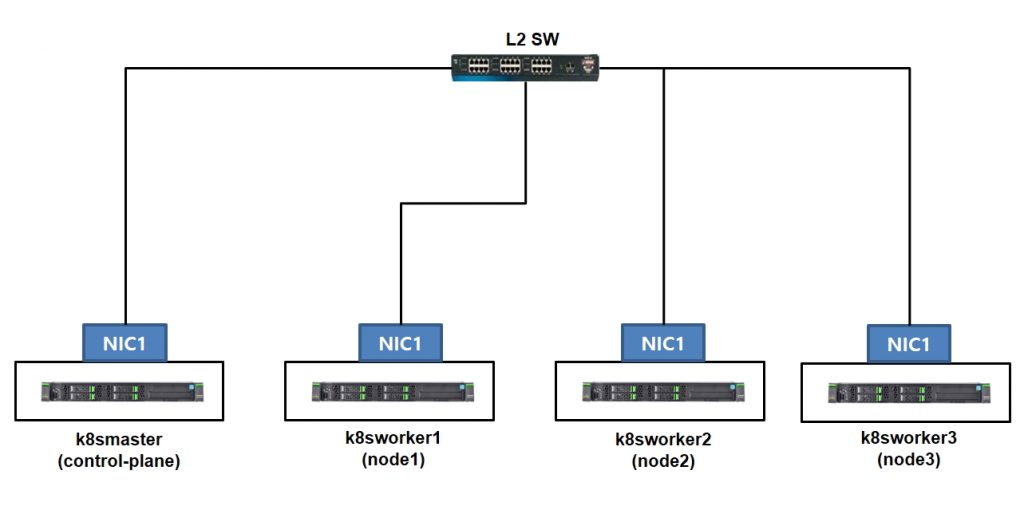

Server layout: 4 servers in total (1 master / 3 worker nodes)

The master server controls the whole cluster, and the 3 workers are the servers where the pods actually run.

1. Register the hostnames in /etc/hosts (master and all worker nodes)

        [root@localhost ~]# vi /etc/hosts
        127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

        192.168.0.195 k8smaster
        192.168.0.196 k8sworker1
        192.168.0.197 k8sworker2
        192.168.0.198 k8sworker3
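Editing /etc/hosts by hand on four servers is easy to get wrong, so the same entries can also be added from a script. The following is a minimal sketch, assuming the IPs and names listed above; add_host_entry is a hypothetical helper, and the target defaults to a scratch file so it can be tried safely before pointing HOSTS_FILE at /etc/hosts.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP NAME" to a hosts file unless NAME is already listed.
add_host_entry() {
  local file="$1" ip="$2" name="$3"
  # -w matches the hostname as a whole word, so k8sworker1 does not match k8sworker10
  if ! grep -qw -- "$name" "$file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Target file; defaults to a scratch file so the sketch is safe to try.
HOSTS_FILE="${HOSTS_FILE:-$(mktemp)}"

add_host_entry "$HOSTS_FILE" 192.168.0.195 k8smaster
add_host_entry "$HOSTS_FILE" 192.168.0.196 k8sworker1
add_host_entry "$HOSTS_FILE" 192.168.0.197 k8sworker2
add_host_entry "$HOSTS_FILE" 192.168.0.198 k8sworker3
cat "$HOSTS_FILE"
```

Because the helper checks for an existing entry first, running the script twice does not duplicate lines.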

2. Set the hostname on the master server

        [root@localhost ~]# hostnamectl set-hostname k8smaster

3. Set the hostname on worker nodes 1 to 3

        ### k8sworker1
        [root@localhost ~]# hostnamectl set-hostname k8sworker1

        ### k8sworker2
        [root@localhost ~]# hostnamectl set-hostname k8sworker2

        ### k8sworker3
        [root@localhost ~]# hostnamectl set-hostname k8sworker3

4. On all master and worker nodes: disable SELinux and disable the firewall

        [root@localhost ~]# setenforce 0
        setenforce: SELinux is disabled

        [root@localhost ~]# sed -i --follow-symlinks 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/sysconfig/selinux

        ### Disable the firewall
        [root@localhost ~]# systemctl disable firewalld
        Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

If you want to keep the firewall enabled, the ports below must be allowed (on both master and worker nodes).

        # firewall-cmd --permanent --add-port=6443/tcp
        # firewall-cmd --permanent --add-port=2379-2380/tcp
        # firewall-cmd --permanent --add-port=10250/tcp
        # firewall-cmd --permanent --add-port=10251/tcp
        # firewall-cmd --permanent --add-port=10252/tcp
        # firewall-cmd --permanent --add-port=10255/tcp
        # firewall-cmd --reload
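The repeated firewall-cmd calls can be generated from a single port list. The sketch below only prints the commands (a dry run), so they can be reviewed before being run as root. The port list is copied from the step above; component ports can differ between Kubernetes releases, so it is worth checking the list against the documentation for your version. print_firewall_cmds is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Ports taken from the list above; adjust for your Kubernetes version and CNI plugin.
PORTS=(6443/tcp 2379-2380/tcp 10250/tcp 10251/tcp 10252/tcp 10255/tcp)

# Print the firewall-cmd invocations instead of running them (a dry run).
print_firewall_cmds() {
  local port
  for port in "${PORTS[@]}"; do
    echo "firewall-cmd --permanent --add-port=${port}"
  done
  echo "firewall-cmd --reload"
}

print_firewall_cmds
# To apply for real, as root: print_firewall_cmds | sh
```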

5. If swap is enabled, turn it off (all servers)

        [root@localhost ~]# swapoff -a

        [root@localhost ~]# vi /etc/fstab

        # Comment out the swap entry
        #/dev/mapper/centos-swap swap swap defaults 0 0
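The fstab edit can also be done without opening an editor. This is a minimal sketch, assuming the swap entry looks like the one shown above; comment_out_swap is a hypothetical helper, and the demonstration runs on a scratch file rather than the real /etc/fstab.

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out every active swap entry in an fstab-style file.
comment_out_swap() {
  local file="$1"
  # Match non-comment lines that contain a whitespace-delimited "swap" field
  # and prefix them with '#'. A backup is kept as <file>.bak.
  sed -i.bak -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$file"
}

# Demonstration on a scratch copy rather than the real /etc/fstab.
demo="$(mktemp)"
printf '%s\n' \
  '/dev/mapper/centos-root /    xfs  defaults 0 0' \
  '/dev/mapper/centos-swap swap swap defaults 0 0' > "$demo"
comment_out_swap "$demo"
cat "$demo"
```

On a real server the call would be comment_out_swap /etc/fstab, run as root after swapoff -a.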

6. Once the base configuration is in place, reboot every server once

        [root@localhost ~]# init 6

7. Add kubernetes.repo (all master and worker servers)

        [root@k8smaster ~]# vi /etc/yum.repos.d/kubernetes.repo

        [kubernetes]
        name=Kubernetes
        baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64
        enabled=1
        gpgcheck=1
        repo_gpgcheck=1
        gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg
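Since the same file is needed on all four servers, it can be written with a here-document instead of vi. The sketch below reproduces the repo definition from this step; write_k8s_repo is a hypothetical helper and the output goes to a scratch file by default. Note that the Kubernetes project has since moved its packages to pkgs.k8s.io, so the baseurl and gpgkey values shown here may need to be replaced on a current system.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the repo definition shown above to the given path.
write_k8s_repo() {
  local path="$1"
  cat > "$path" <<'EOF'
[kubernetes]
name=Kubernetes
baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg
EOF
}

# Written to a scratch file here; on a real node the path would be
# /etc/yum.repos.d/kubernetes.repo (requires root).
REPO_FILE="${REPO_FILE:-$(mktemp)}"
write_k8s_repo "$REPO_FILE"
cat "$REPO_FILE"
```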

8. Install yum-utils (all master and worker servers)

        [root@k8smaster ~]# yum -y install yum-utils

9. Add the Docker repo (all master and worker servers)

        [root@k8smaster ~]# yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

        ### Verify
        [root@k8smaster ~]# ls -l /etc/yum.repos.d/docker-ce.repo
        -rw-r--r-- 1 root root 1919 Jul 31 23:46 /etc/yum.repos.d/docker-ce.repo

10. Install docker, kubeadm, and containerd (all master and worker servers)

        [root@k8smaster ~]# yum install docker-ce docker-ce-cli containerd.io docker-compose-plugin kubeadm

11. Enable the services (all master and worker servers)

        ### kubelet
        [root@k8smaster ~]# systemctl enable kubelet && systemctl start kubelet
        Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

        ### docker
        [root@k8smaster ~]# systemctl enable docker && systemctl start docker
        Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

12. On the master server only: load br_netfilter and set bridge-nf-call-iptables

        [root@localhost ~]# modprobe br_netfilter

        [root@localhost ~]# vi /etc/sysctl.conf
        net.ipv4.ip_forward=1

        ### Apply sysctl
        [root@localhost ~]# sysctl -p
        net.ipv4.ip_forward = 1

        [root@localhost ~]# echo '1' > /proc/sys/net/bridge/bridge-nf-call-iptables
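The modprobe call and the write to /proc take effect immediately but do not survive a reboot. One common way to make them persistent is a pair of drop-in files under /etc/modules-load.d and /etc/sysctl.d. The sketch below is an assumption layered on top of this step, not part of the original procedure; write_k8s_net_config and the file name k8s.conf are hypothetical, and the files are written under a scratch directory for demonstration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write persistent module and sysctl settings under a root prefix.
# Pass "" as the prefix on a real node (as root); a scratch directory is used below.
write_k8s_net_config() {
  local root="$1"
  mkdir -p "$root/etc/modules-load.d" "$root/etc/sysctl.d"
  # Ask systemd-modules-load to load br_netfilter at boot
  echo "br_netfilter" > "$root/etc/modules-load.d/k8s.conf"
  # The same two values that the step above sets by hand
  cat > "$root/etc/sysctl.d/k8s.conf" <<'EOF'
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
}

scratch="$(mktemp -d)"
write_k8s_net_config "$scratch"
cat "$scratch/etc/sysctl.d/k8s.conf"
# On a real node, follow with: sysctl --system
```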

13. Enable and start the containerd service (master server only)

        [root@k8smaster ~]# systemctl start containerd && systemctl enable containerd
        Created symlink from /etc/systemd/system/multi-user.target.wants/containerd.service to /usr/lib/systemd/system/containerd.service.

14. Delete the containerd config file and restart containerd (master server only). You can proceed without this, but the packaged configuration causes errors in later steps, so it is removed in advance.

        [root@k8smaster ~]# rm -f /etc/containerd/config.toml

        ### Restart containerd
        [root@k8smaster ~]# systemctl restart containerd
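A likely reason this step helps: the config.toml shipped with the containerd.io package lists the CRI plugin under disabled_plugins, and kubeadm needs that plugin to talk to containerd. Before deleting the file, it can be checked for that setting. This is a minimal sketch under that assumption; cri_disabled is a hypothetical helper, demonstrated on a scratch file that mimics the packaged default.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if a containerd config file disables the CRI plugin.
cri_disabled() {
  local file="$1"
  grep -Eq '^[[:space:]]*disabled_plugins[[:space:]]*=.*"cri"' "$file" 2>/dev/null
}

# Demonstration on a scratch file that mimics the packaged default.
demo_cfg="$(mktemp)"
echo 'disabled_plugins = ["cri"]' > "$demo_cfg"

if cri_disabled "$demo_cfg"; then
  echo "CRI plugin is disabled in $demo_cfg"
fi
# On a real node: cri_disabled /etc/containerd/config.toml
```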

15. Initialize the cluster with kubeadm (master server only)

If this step fails, read the error message to check whether the socket is healthy or whether files already exist, fix the cause, and run the command again.

        [root@k8smaster ~]# kubeadm init
        [init] Using Kubernetes version: v1.28.0
        [preflight] Running pre-flight checks
        [preflight] Pulling images required for setting up a Kubernetes cluster
        [preflight] This might take a minute or two, depending on the speed of your internet connection
        [preflight] You can also perform this action in beforehand using 'kubeadm config images pull'
        W0822 16:36:32.923127 10005 checks.go:835] detected that the sandbox image "registry.k8s.io/pause:3.6" of the container runtime is inconsistent with that used by kubeadm. It is recommended that using "registry.k8s.io/pause:3.9" as the CRI sandbox image.
        [certs] Using certificateDir folder "/etc/kubernetes/pki"
        -- omitted --
        [addons] Applied essential addon: CoreDNS
        [addons] Applied essential addon: kube-proxy

        Your Kubernetes control-plane has initialized successfully!

        To start using your cluster, you need to run the following as a regular user:

          mkdir -p $HOME/.kube
          sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
          sudo chown $(id -u):$(id -g) $HOME/.kube/config

        Alternatively, if you are the root user, you can run:

          export KUBECONFIG=/etc/kubernetes/admin.conf

        You should now deploy a pod network to the cluster.
        Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
          https://kubernetes.io/docs/concepts/cluster-administration/addons/

        Then you can join any number of worker nodes by running the following on each as root:

        kubeadm join 192.168.0.195:6443 --token 21z1aa.orvl3b2hxs2nyhw7 \
                --discovery-token-ca-cert-hash sha256:defccba8cd1fe7b9e994876109f7a136bdfa28ae1bc0bb375bf55a49149ac90a

Copy the configuration as instructed in the kubeadm init output (master server only).

        [root@k8smaster ~]# mkdir -p $HOME/.kube

        [root@k8smaster ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

        [root@k8smaster ~]# chown $(id -u):$(id -g) $HOME/.kube/config

        [root@k8smaster ~]# export KUBECONFIG=/etc/kubernetes/admin.conf

16. Kubernetes now needs a pod network. Configuring one by hand is difficult, so Calico is installed from a YAML manifest instead (master only)

        [root@k8smaster ~]# curl https://raw.githubusercontent.com/projectcalico/calico/v3.26.1/manifests/calico.yaml -O
          % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                         Dload  Upload   Total   Spent    Left  Speed
        100  238k  100  238k    0     0  1044k      0 --:--:-- --:--:-- --:--:-- 1043k

17. Once the manifest is downloaded, create the resources with kubectl (master only)

        [root@k8smaster ~]# kubectl apply -f calico.yaml
        poddisruptionbudget.policy/calico-kube-controllers created
        serviceaccount/calico-kube-controllers created
        serviceaccount/calico-node created
        serviceaccount/calico-cni-plugin created
        configmap/calico-config created
        customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created
        customresourcedefinition.apiextensions.k8s.io/bgpfilters.crd.projectcalico.org created
        customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created
        customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created
        customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created
        customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created
        customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created
        customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created
        customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created
        customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created
        customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created
        customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created
        customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created
        customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created
        customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org created
        customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created
        customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created
        customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created
        clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created
        clusterrole.rbac.authorization.k8s.io/calico-node created
        clusterrole.rbac.authorization.k8s.io/calico-cni-plugin created
        clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created
        clusterrolebinding.rbac.authorization.k8s.io/calico-node created
        clusterrolebinding.rbac.authorization.k8s.io/calico-cni-plugin created
        daemonset.apps/calico-node created
        deployment.apps/calico-kube-controllers created

If everything has installed correctly up to this point, all work on the master server is complete.

18. Check that the node is ready

        [root@k8smaster ~]# kubectl get nodes
        NAME        STATUS   ROLES           AGE     VERSION
        k8smaster   Ready    control-plane   4m29s   v1.28.0

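If the status is Ready, the installation completed successfully.

From here on, the work is done on worker servers 1 to 3. Stop the firewall on all of them before proceeding.

The firewall ports will be covered in a separate post. Since this is a test environment, the firewall is stopped for now.

If you do enable the firewall, see step 4 of this post.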

19. Flush the firewall rules and load br_netfilter (worker servers 1 to 3 only)

        [root@k8sworker1 ~]# iptables -F

        [root@k8sworker1 ~]# modprobe br_netfilter

        [root@k8sworker1 ~]# vi /etc/sysctl.conf
        net.ipv4.ip_forward=1

        [root@k8sworker1 ~]# sysctl -p
        net.ipv4.ip_forward = 1

        [root@k8sworker1 ~]# echo '1' > /proc/sys/net/bridge/bridge-nf-call-iptables

20. Delete the containerd config file and restart containerd (worker servers 1 to 3 only)

        [root@k8sworker1 ~]# rm -f /etc/containerd/config.toml

        [root@k8sworker1 ~]# systemctl restart containerd

21. Join the master server with kubeadm (worker servers 1 to 3 only). Copy the join command printed at the end of kubeadm init on the master and run it as is.

        [root@k8sworker1 ~]# kubeadm join 192.168.0.195:6443 --token 21z1aa.orvl3b2hxs2nyhw7 --discovery-token-ca-cert-hash sha256:defccba8cd1fe7b9e994876109f7a136bdfa28ae1bc0bb375bf55a49149ac90a
        [preflight] Running pre-flight checks
        [preflight] Reading configuration from the cluster...
        [preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'
        [kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
        [kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
        [kubelet-start] Starting the kubelet
        [kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

        This node has joined the cluster:
        * Certificate signing request was sent to apiserver and a response was received.
        * The Kubelet was informed of the new secure connection details.

        Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

The join succeeded if the output looks like the above. If an error occurs here, read the log output to find the cause and fix it.
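Because the join command is taken from the kubeadm init output, it helps to save that output to a file and extract the command from it. The sketch below assumes the output was saved to a file of your choosing; extract_join_cmd is a hypothetical helper that folds the backslash-continued lines into a single line. If the token has expired (kubeadm tokens are valid for 24 hours by default), running kubeadm token create --print-join-command on the master prints a fresh join command instead.

```shell
#!/usr/bin/env bash
# Hypothetical helper: pull the "kubeadm join ..." command out of saved init output,
# joining backslash-continued lines into one line.
extract_join_cmd() {
  awk '
    /^[ \t]*kubeadm join/ { collecting = 1 }
    collecting {
      line = $0
      cont = sub(/\\[ \t]*$/, "", line)      # 1 if the line ended with a backslash
      gsub(/^[ \t]+|[ \t]+$/, "", line)      # trim surrounding whitespace
      out = (out == "" ? line : out " " line)
      if (!cont) { print out; exit }
    }
  ' "$1"
}

# Demonstration with the tail of the init output shown in step 15.
init_log="$(mktemp)"
cat > "$init_log" <<'EOF'
Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.0.195:6443 --token 21z1aa.orvl3b2hxs2nyhw7 \
        --discovery-token-ca-cert-hash sha256:defccba8cd1fe7b9e994876109f7a136bdfa28ae1bc0bb375bf55a49149ac90a
EOF
extract_join_cmd "$init_log"
```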

22. Check that the 3 worker nodes were added (on the master)

        [root@k8smaster ~]# kubectl get nodes
        NAME         STATUS   ROLES           AGE     VERSION
        k8smaster    Ready    control-plane   21m     v1.28.0
        k8sworker1   Ready    <none>          5m7s    v1.28.0
        k8sworker2   Ready    <none>          2m52s   v1.28.0
        k8sworker3   Ready    <none>          2m34s   v1.28.0
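A freshly joined node can show NotReady for a short time while Calico starts on it. To spot such nodes without reading the table by eye, the output can be filtered. This is a minimal sketch; not_ready_nodes is a hypothetical helper, and the sample input (including the NotReady row) is made up for demonstration. On a live cluster, kubectl wait --for=condition=Ready nodes --all --timeout=300s blocks until every node is Ready.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get nodes` output on stdin and
# print the names of nodes whose STATUS column is not exactly "Ready".
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Demonstration with canned output; the NotReady row is invented for illustration.
# On the master: kubectl get nodes | not_ready_nodes
sample='NAME         STATUS     ROLES           AGE     VERSION
k8smaster    Ready      control-plane   21m     v1.28.0
k8sworker1   NotReady   <none>          10s     v1.28.0'
printf '%s\n' "$sample" | not_ready_nodes
```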

23. As a simple test, create 3 web servers with nginx (on the master)

        [root@k8smaster ~]# kubectl run nginx-web1 --image=nginx
        pod/nginx-web1 created
        [root@k8smaster ~]# kubectl run nginx-web2 --image=nginx
        pod/nginx-web2 created
        [root@k8smaster ~]# kubectl run nginx-web3 --image=nginx
        pod/nginx-web3 created
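The three kubectl run calls differ only in the pod name, so they can be generated in a loop. The sketch below prints the commands rather than running them; print_nginx_run_cmds is a hypothetical helper, and the nginx-webN naming follows the step above.

```shell
#!/usr/bin/env bash
# Print the kubectl commands for N nginx pods (a dry run).
print_nginx_run_cmds() {
  local count="$1" i
  for i in $(seq 1 "$count"); do
    echo "kubectl run nginx-web${i} --image=nginx"
  done
}

print_nginx_run_cmds 3
# To create the pods for real, on the master: print_nginx_run_cmds 3 | sh
```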

24. Check that the pods are running

        [root@k8smaster ~]# kubectl get pods
        NAME         READY   STATUS    RESTARTS   AGE
        nginx-web1   1/1     Running   0          16s
        nginx-web2   1/1     Running   0          13s
        nginx-web3   1/1     Running   0          9s

25. To see more detail, including the pod IPs, use the -o wide option (on the master)

        [root@k8smaster ~]# kubectl get pods -o wide
        NAME         READY   STATUS    RESTARTS   AGE   IP               NODE         NOMINATED NODE   READINESS GATES
        nginx-web1   1/1     Running   0          38s   172.16.137.2     k8sworker3   <none>           <none>
        nginx-web2   1/1     Running   0          35s   172.16.230.193   k8sworker1   <none>           <none>
        nginx-web3   1/1     Running   0          31s   172.16.8.2       k8sworker2   <none>           <none>

26. Edit each web server's index.html with kubectl exec (on the master)

(The commands will be covered properly in a later post. This is a test environment, so just follow along for now.)

        [root@k8smaster ~]# kubectl exec nginx-web1 -it -- /bin/bash
        root@nginx-web1:/# echo "nginx-web1 server" > /usr/share/nginx/html/index.html
        root@nginx-web1:/# exit
        exit

        [root@k8smaster ~]# kubectl exec nginx-web2 -it -- /bin/bash
        root@nginx-web2:/# echo "nginx-web2 server" > /usr/share/nginx/html/index.html
        root@nginx-web2:/# exit
        exit

        [root@k8smaster ~]# kubectl exec nginx-web3 -it -- /bin/bash
        root@nginx-web3:/# echo "nginx-web3 server" > /usr/share/nginx/html/index.html
        root@nginx-web3:/# exit
        exit

The commands above use kubectl exec to write content into each pod. The -it -- /bin/bash part opens an interactive shell inside the pod.

Now use curl to test access to the nginx web servers.

        [root@k8smaster ~]# curl 172.16.137.2
        nginx-web1 server

        [root@k8smaster ~]# curl 172.16.230.193
        nginx-web2 server

        [root@k8smaster ~]# curl 172.16.8.2
        nginx-web3 server

You can confirm that each server returns the expected output.

Delete the pods created for the test. To delete them one at a time, specify the pod name.

        [root@k8smaster ~]# kubectl delete pods nginx-web1
        pod "nginx-web1" deleted
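Instead of copying each IP by hand, the name and IP columns can be pulled out of the kubectl get pods -o wide output. This is a minimal sketch; pod_ips is a hypothetical helper, and it assumes the default wide layout where IP is the sixth column and the RESTARTS column is a plain number, as in the output shown in step 25.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get pods -o wide` output on stdin and
# print "NAME IP" pairs (columns 1 and 6 of the default wide layout).
pod_ips() {
  awk 'NR > 1 { print $1, $6 }'
}

# Demonstration with the output from step 25.
sample='NAME         READY   STATUS    RESTARTS   AGE   IP               NODE         NOMINATED NODE   READINESS GATES
nginx-web1   1/1     Running   0          38s   172.16.137.2     k8sworker3   <none>           <none>
nginx-web2   1/1     Running   0          35s   172.16.230.193   k8sworker1   <none>           <none>
nginx-web3   1/1     Running   0          31s   172.16.8.2       k8sworker2   <none>           <none>'
printf '%s\n' "$sample" | pod_ips

# On the master, each pod could then be fetched in turn:
#   kubectl get pods -o wide | pod_ips | while read -r name ip; do curl -s "$ip"; done
```

Column positions shift if RESTARTS contains extra text, so this is only suitable for a quick test like the one here.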

To delete all pods:

        [root@k8smaster ~]# kubectl delete pods --all
        pod "nginx-web2" deleted
        pod "nginx-web3" deleted

Related post: Kubernetes bash auto-completion https://xinet.kr/?p=3791